Why is increasing velocity important?

There has been some debate about whether increasing the velocity of testing programmes is a good thing. At Kin + Carta, our philosophy is simple: the more tests you run, the more you learn about your customers and how to grow your business.

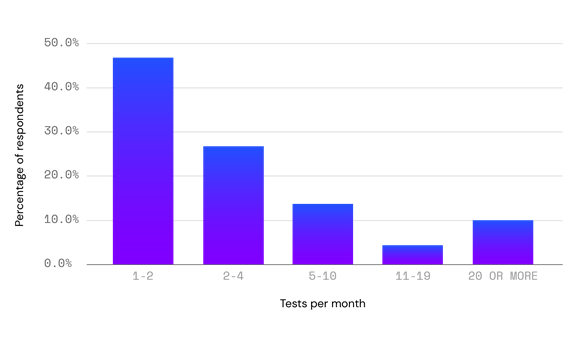

Did the disruptors of this world (Airbnb, Uber, Netflix and others) get to where they are today by running one or two experiments a month? No! They all share one thing in common: they experiment at high velocity across all of their business units to increase learning and create exponential growth.

Through years of working with a diverse array of optimisation and testing platforms, we have found that one factor in particular has opened the door to increasing the velocity of our experimentation programmes whilst retaining the quality we need to have confidence in our results: the optimisation platform we use, Optimizely.

What is Optimizely?

Optimizely is an enterprise optimisation platform that provides A/B and multivariate testing capabilities plus website personalisation. Whilst this is a similar offering to other A/B testing platforms, Optimizely has a few tricks up its sleeve to facilitate high-velocity testing whilst retaining the statistical quality of results.

The Stats Accelerator

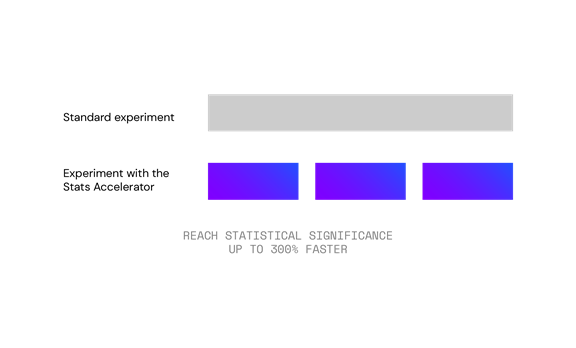

The Stats Accelerator helps you algorithmically capture more value from your experiments by reducing the time to reach statistical significance, so you spend less time waiting for results. It does this by monitoring experiments and using machine learning to adjust traffic distribution among variations. In simple terms, it shows more visitors the variations that have a better chance of reaching statistical significance.
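To make the idea of shifting traffic towards promising variations more concrete, here is a minimal Python sketch. It is not Optimizely's algorithm, which this post does not describe. The `allocate` function, the `floor` parameter and the rule of sharing traffic in proportion to each variation's absolute z-score against the baseline are all assumptions made purely for illustration.

```python
import math


def z_score(conv_a, n_a, conv_b, n_b):
    """Two-proportion z statistic for variation B against baseline A."""
    p_a, p_b = conv_a / n_a, conv_b / n_b
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    return (p_b - p_a) / se if se > 0 else 0.0


def allocate(baseline, variations, floor=0.1):
    """Split traffic among the non-baseline variations (hypothetical heuristic).

    baseline and each variation are (conversions, visitors) tuples.
    Every variation keeps at least `floor` of the traffic; the rest is
    shared in proportion to how far each variation currently sits from
    the baseline, measured by |z|. Requires floor * len(variations) <= 1.
    """
    scores = {
        name: abs(z_score(*baseline, *observed))
        for name, observed in variations.items()
    }
    total = sum(scores.values())
    count = len(variations)
    if total == 0:
        # No signal yet: fall back to an even split.
        return {name: 1 / count for name in variations}
    spare = 1 - floor * count
    return {name: floor + spare * score / total for name, score in scores.items()}


shares = allocate(
    baseline=(100, 1000),
    variations={"B": (130, 1000), "C": (105, 1000)},
)
print(shares)  # B receives the larger share because it is further from the baseline
```

The floor keeps every variation receiving some traffic, so a variation that starts slowly still has a chance to show an effect later.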

Stats Accelerator aims to discover as many significant variations as possible, and it relies on dynamic traffic allocation to do so. It is important to note that any time you allocate traffic dynamically over time, you run the risk of introducing bias into your results. Left uncorrected, this bias can significantly distort your reported results. Stats Accelerator neutralises this bias through a technique called ‘weighted improvement’. While we won't go into detail regarding weighted improvement in this post, it's worth pointing out that it is used to calculate the estimated true lift. This is vitally important because it filters out bias that would otherwise have been present.
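The bias is easier to see with numbers. The sketch below uses made-up data in which the control and the variant convert identically within each period, but the traffic split changes between periods and the second period happens to convert better overall. Pooling all visitors produces a lift that does not exist, whereas comparing the two within each period and then averaging removes it. The `epochs` structure, the function names and the choice to weight each period by its share of total visitors are assumptions for illustration only. This is a simplified stand-in for the idea, not Optimizely's actual weighted improvement calculation.

```python
# Each epoch is a period with a fixed traffic split.
# Values are (conversions, visitors). The data is invented for illustration.
epochs = [
    {"control": (100, 1000), "variant": (100, 1000)},  # 50/50 split, 10% vs 10%
    {"control": (40, 200), "variant": (360, 1800)},    # 10/90 split, 20% vs 20%
]


def rate(conversions, visitors):
    return conversions / visitors


def naive_improvement(epochs):
    """Pool every visitor across epochs, then compare conversion rates."""
    totals = {}
    for arm in ("control", "variant"):
        conversions = sum(epoch[arm][0] for epoch in epochs)
        visitors = sum(epoch[arm][1] for epoch in epochs)
        totals[arm] = rate(conversions, visitors)
    return totals["variant"] - totals["control"]


def weighted_improvement(epochs):
    """Compare within each epoch, then average weighted by epoch traffic."""
    all_visitors = sum(e["control"][1] + e["variant"][1] for e in epochs)
    result = 0.0
    for e in epochs:
        epoch_visitors = e["control"][1] + e["variant"][1]
        difference = rate(*e["variant"]) - rate(*e["control"])
        result += difference * epoch_visitors / all_visitors
    return result


print(round(naive_improvement(epochs), 4))     # spurious lift from pooling
print(round(weighted_improvement(epochs), 4))  # no lift once epochs are compared separately
```

With this data the pooled comparison reports a lift of roughly 4.8 percentage points, while the per-period comparison reports none. The variant only looks better when pooled because most of its traffic arrived during the better-converting period.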